Kettle recently switched from accessing the file system through the standard Java libraries to using the Apache VFS libraries. So what does that mean, and why does it matter? Well, it opens up a whole slew of deployment options and provides even more ways to manage Kettle code. You can now keep your actual ETL jobs and transforms in zip files, on web servers, FTP servers, WebDAV locations, etc. Basically, you can make Kettle even thinner than it is right now.
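To give a feel for what those locations might look like, here is a small Python sketch. It is only an illustration: the scheme prefixes follow Apache Commons VFS naming, but every host, credential, and path below is made up, and the helper names `vfs_scheme` and `kitchen_command` are hypothetical. The script only builds command lines; it does not run Kettle.

```python
from typing import List
from urllib.parse import urlsplit

# Hypothetical locations: only the scheme prefixes follow Apache Commons VFS
# naming; the hosts, credentials, and paths are invented for illustration.
EXAMPLE_LOCATIONS = [
    "file:///opt/etl/parentjob.kjb",
    "http://example.com/kettle/parentjob.kjb",
    "ftp://etl:secret@ftp.example.com/kettle/parentjob.kjb",
    "webdav://dav.example.com/kettle/parentjob.kjb",
    "zip:file:///opt/etl/jobs.zip!/parentjob.kjb",
]


def vfs_scheme(location: str) -> str:
    """Return the leading URL scheme, the part a VFS-style resolver dispatches on."""
    return urlsplit(location).scheme


def kitchen_command(location: str) -> List[str]:
    """Build (but do not run) a Kitchen command line for a job location."""
    return ["./kitchen.sh", f"-file={location}"]


if __name__ == "__main__":
    for location in EXAMPLE_LOCATIONS:
        print(vfs_scheme(location), " ".join(kitchen_command(location)))
```

Whether a given scheme actually works depends on which VFS providers ship with your Kettle version, so treat the list as a starting point to try rather than a guarantee.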

For instance, you can now run Kettle ETL jobs with ONLY the base Kettle installation and a remote URL. Consider the following example.

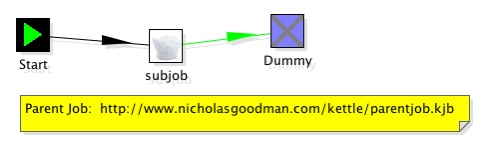

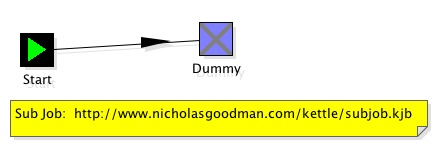

I have two Kettle jobs:

http://www.nicholasgoodman.com/kettle/parentjob.kjb

and

http://www.nicholasgoodman.com/kettle/subjob.kjb

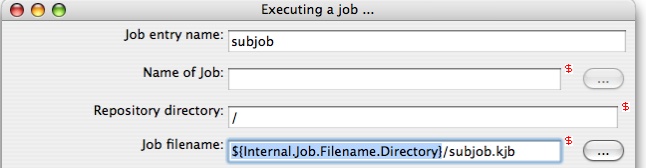

The parent job does something very simple: it executes subjob.kjb using relative addressing. You can use the well-known and supported ${Internal.Job.Filename.Directory} variable so that you don’t have to hard-code the physical location of another job, transform, data file, etc. In this case, it doesn’t matter whether the subjob is on the local file system or on a web server. The dialog to set up the relative addressing looks like this:

Now, using nothing but the standard Kettle 2.5.0 download, I can execute these two jobs without any client-side ETL jobs or transforms:

./kitchen.sh -file=http://www.nicholasgoodman.com/kettle/parentjob.kjb

17:42:30,075 INFO [Kitchen] Kitchen - Start of run.

17:42:30,644 INFO [Kettle] Kettle - Reading repositories XML file: /Users/ngoodman/.kettle/repositories.xml

17:42:30,647 ERROR [Kettle] Kettle - Error opening file: /Users/ngoodman/.kettle/repositories.xml : java.io.FileNotFoundException: /Users/ngoodman/.kettle/repositories.xml (No such file or directory)

ERROR: No repositories defined on this system.

2007/05/28 17:42:30:695 PDT [INFO] DefaultFileReplicator - Using "/tmp/vfs_cache" as temporary files store.

17:42:31,991 INFO [Thread[parentjob (parentjob (Thread-2)),5,main]] Thread[parentjob (parentjob (Thread-2)),5,main] - Sleeping: 0 minutes

17:42:31,992 INFO [parentjob] parentjob - Starting entry [subjob]

17:42:32,133 INFO [Thread[subjob (subjob (Thread-3)),5,main]] Thread[subjob (subjob (Thread-3)),5,main] - Sleeping: 0 minutes

17:42:32,134 INFO [subjob] subjob - Starting entry [Dummy]

17:42:32,135 INFO [subjob] subjob - Finished jobentry [Dummy] (result=true)

17:42:32,233 INFO [parentjob] parentjob - Starting entry [Dummy]

17:42:32,234 INFO [parentjob] parentjob - Finished jobentry [Dummy] (result=true)

17:42:32,234 INFO [parentjob] parentjob - Finished jobentry [subjob] (result=true)

17:42:32,235 INFO [Kitchen] Kitchen - Finished!

17:42:32,235 INFO [Kitchen] Kitchen - Start=2007/05/28 17:42:30.630, Stop=2007/05/28 17:42:32.235

17:42:32,235 INFO [Kitchen] Kitchen - Processing ended after 1 seconds.
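To make the relative addressing a bit more concrete, here is a minimal Python sketch of how a reference built on ${Internal.Job.Filename.Directory} could resolve to the same sibling file whether the parent job lives on a web server or on disk. This is not Kettle's actual implementation: the helper names `job_directory` and `resolve` are hypothetical, the local path is invented, and I'm assuming the job entry's filename is written as `${Internal.Job.Filename.Directory}/subjob.kjb`.

```python
# The variable name comes from Kettle; the substitution logic below is an
# illustrative assumption, not Kettle's own code.
VARIABLE = "${Internal.Job.Filename.Directory}"


def job_directory(job_location: str) -> str:
    """Return everything before the last '/' of a job path or URL."""
    return job_location.rpartition("/")[0]


def resolve(reference: str, parent_location: str) -> str:
    """Replace the directory variable with the parent job's directory."""
    return reference.replace(VARIABLE, job_directory(parent_location))


if __name__ == "__main__":
    reference = VARIABLE + "/subjob.kjb"  # assumed content of the job entry

    # Parent job served from a web server (URL taken from this post).
    print(resolve(reference, "http://www.nicholasgoodman.com/kettle/parentjob.kjb"))
    # -> http://www.nicholasgoodman.com/kettle/subjob.kjb

    # Parent job on a local disk (hypothetical path).
    print(resolve(reference, "/home/etl/jobs/parentjob.kjb"))
    # -> /home/etl/jobs/subjob.kjb
```

The point is that the reference itself never changes; only the location the parent job was loaded from does.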

Just another nice feature that opens up even more interesting ways to manage a deployment of ETL jobs and transforms. Great work, Kettle team!

Hi,

This is Pradeep. I want to integrate 10 tables into one single output table. How can I integrate those tables? Please give an example.