I’m often asked how LucidDB can perform so much better than traditional row store databases like Oracle, SQL Server, DB2, and MySQL.

There are a bunch of reasons, and we have entire sections of our Wiki dedicated to the topic, but first and foremost is the cornerstone of almost every analytic database: we’ve changed the way we orient the data on disk. I’m not going to go into too much detail here, but as a start, a very simple example can help explain how we can deliver an order of magnitude better performance than a row store. More to come, as well.

Take the following query that is typical of BI workloads (we’re using commercial flight data available from the BTS):

select count(Carrier), Carrier, DayOfWeek, CancellationCode

group by Carrier, DayOfWeek, CancellationCode

This query is typical – we want some aggregation (count of flights) grouped by several qualifying attributes (Carrier, DayOfWeek, CancellationCode). We need to examine (in our example dataset) approximately 63 million records to answer this question. In other words, we’re looking at all the data in a table to answer the query.

In a row store, even with indexes, a query that examines every record has to touch all the data in the table. This means THE ENTIRE TABLE is accessed from an I/O perspective. Even though only a few columns might be used, the ENTIRE row (which contains the data for every column) is read from disk.

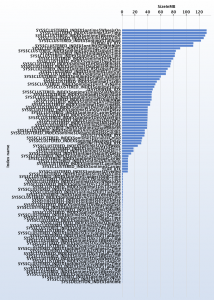

Let’s take our 63 million record table, which has approximately 99 columns. Assuming (and this is a bad assumption) that the row store takes the exact same amount of storage as a column store, the total table size is 3,025 MB. In the row store, the rows are stored in blocks and are relatively uniform (i.e., approximately 1,000 rows per block, stored in 63,000 blocks). In a column store, we store the columns separately. Our storage for the same 3,025 MB breaks down like this (total is still 3,025 MB).
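As a quick sanity check on those numbers, here is the arithmetic as a small Python sketch. The row count, rows per block, and table size come from the text above; the average block size is simply derived from them and is not a LucidDB or Oracle setting.

```python
# Back-of-the-envelope figures taken from the text above.
TOTAL_ROWS = 63_000_000
ROWS_PER_BLOCK = 1_000      # approximate, per the text
TABLE_SIZE_MB = 3_025

# A full table scan in the row store has to read every one of these blocks.
blocks = TOTAL_ROWS // ROWS_PER_BLOCK

# Derived value only: what the average block works out to at this table size.
kb_per_block = TABLE_SIZE_MB * 1024 / blocks

print(f"blocks to read for a full scan: {blocks:,}")
print(f"average block size: {kb_per_block:.1f} KB")
```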

NOTE: Sorry for the graph labels! Sort of jumbled!

As you can see, some columns still take up a fair amount of space (120 MB+), but other columns are much, much smaller. The smaller columns are ones where values are repeated often (Year, Carrier, etc.).
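To see why frequently repeated values make a column small, here is a minimal sketch of dictionary encoding in Python. This is one common technique for low-cardinality columns, used here purely as an illustration; it is an assumption, not a description of how LucidDB actually stores its columns. The function name and the sample carrier codes are hypothetical.

```python
def dictionary_encode(values):
    """Replace each value with a small integer code into a list of distinct values."""
    dictionary = {}  # value -> code, in first-seen order
    codes = []
    for value in values:
        # New values get the next free code; repeats reuse their existing code.
        code = dictionary.setdefault(value, len(dictionary))
        codes.append(code)
    return list(dictionary), codes


# Hypothetical sample of a Carrier column: few distinct values, many repeats.
carriers = ["AA", "DL", "AA", "UA", "DL", "AA"]
distinct, codes = dictionary_encode(carriers)

# Bits needed per row once the distinct values are stored only once.
bits_per_code = max(1, (len(distinct) - 1).bit_length())

print(distinct, codes, bits_per_code)
```

With only three distinct carriers in this sample, each row needs a 2-bit code instead of a full string, which is the intuition behind columns like Year or Carrier taking so little space.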

Here’s the gist. Remember our SQL query typical in BI systems?

select count(Carrier), Carrier, DayOfWeek, CancellationCode group by Carrier, DayOfWeek, CancellationCode

The SQL statement only accesses 3 columns (Carrier, DayOfWeek, CancellationCode).

In a Row Store, the database has to read the ENTIRE table from disk (3025 MB).

In a Column Store, the database only reads the columns it needs from disk (44 MB).

The column store is doing roughly 1/70th of the I/O of the row store! 44 MB vs 3025 MB!
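The comparison is easy to verify. This small Python sketch uses only the two sizes quoted above and works out the fraction of data read and the corresponding reduction factor.

```python
# Sizes quoted in the text above.
ROW_STORE_MB = 3_025     # whole table read by the row store
COLUMN_STORE_MB = 44     # three needed columns read by the column store

fraction = COLUMN_STORE_MB / ROW_STORE_MB
reduction = ROW_STORE_MB / COLUMN_STORE_MB

print(f"column store reads {fraction:.1%} of the data")   # 1.5%
print(f"that is about {reduction:.0f}x less I/O")         # 69x
```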

It isn’t magic. It isn’t some magical breakthrough in CPU technology.

We’ve simply changed how we’re storing the data on disk so that asking the same question (SQL) on the same data (63 million rows) does far less work (44 MB vs 3025 MB) to give the same answer.
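Here is a toy model of that idea in Python. It is a sketch only: the six-column table and its four rows are made-up sample data, "values touched" stands in for bytes read from disk, and the function names are hypothetical. It does not reflect LucidDB internals; it just shows the same group-by answer coming out of both layouts with different amounts of data touched.

```python
from collections import Counter

# Hypothetical miniature of the flight table (the real one has ~99 columns).
COLUMNS = ["Year", "Carrier", "DayOfWeek", "CancellationCode", "FlightNum", "Origin"]
ROWS = [
    (2008, "AA", 1, "", 100, "SFO"),
    (2008, "AA", 1, "", 101, "JFK"),
    (2008, "DL", 2, "B", 200, "ATL"),
    (2008, "AA", 1, "A", 102, "SFO"),
]
NEEDED = ["Carrier", "DayOfWeek", "CancellationCode"]


def scan_row_store(rows):
    """Group-by count over row-oriented data; every field of every row is read."""
    idx = [COLUMNS.index(name) for name in NEEDED]
    touched = 0
    counts = Counter()
    for row in rows:
        touched += len(row)  # the whole row comes off disk, needed or not
        counts[tuple(row[i] for i in idx)] += 1
    return counts, touched


def scan_column_store(columns):
    """Group-by count over column-oriented data; only the needed columns are read."""
    needed = [columns[name] for name in NEEDED]
    touched = sum(len(col) for col in needed)
    counts = Counter(zip(*needed))
    return counts, touched


# Same data, re-oriented so each column is stored on its own.
column_store = {name: [row[i] for row in ROWS] for i, name in enumerate(COLUMNS)}

row_counts, row_touched = scan_row_store(ROWS)
col_counts, col_touched = scan_column_store(column_store)

print(row_counts == col_counts)   # same answer from both layouts
print(row_touched, col_touched)   # values touched: row store vs column store
```

In this toy table the column store touches half the values because 3 of 6 columns are needed; with 3 of roughly 99 columns, as in the real dataset, the gap is far larger.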

I’ll follow up with more on this and other topics, but I hope this helps explain one very basic reason why LucidDB can deliver such fantastic improvements over otherwise very well performing OLTP databases like Oracle.