Real time. Roland has built a cool Pentaho application that collects data from MySQL performance tables and metadata views and shows reports on this performance, errors, etc. Cool stuff for DBAs.

Author Archives: ngoodman

MySQL Archive Tablespace for FACTs

I’m visiting a Pentaho customer right now whose current “transaction” volume is 200 million rows per day. Relatively speaking, this puts their planned warehouse in the top quintile of size. They will face significant issues with load times, data storage, processing reliability, etc. Kettle is the tool they selected and it is working really well. Distributed record processing using Kettle and a FOSS database is a classic case study for Martens scale out manifesto.

This organization doesn’t have unlimited budget. Specifically, they don’t have a telecom type budget for their telecom like volume of data. One of the issues that has come up with their implementation has been the tradeoff between space, and keeping the base level fact records. For example, at 200 million / day and X bytes per fact you start to get into terabytes of storage quickly. It was assumed, from the start of the project, only summary level data could be stored for any window of time exceeding 10 days or so.

The overall math is sound.

Size per record (S) x number of records per day (N) = size per day of data growth (D)

In this equation, there’s really not much we can do, if we want to keep the actual base level transaction, about the number of records per day. N becomes a fixed parameter in this equation. We do have some control over the S value, which is mostly about what this blog is about.

Can we reduce the size of S by such an amount that D becomes more realistic? The answer is the ARCHIVE engine in MySQL.

I created the DDL for a typical fact record. Few Dimension IDs and a few of numeric values.

CREATE TABLE test_archive

(

DIM1_ID INT

, DIM2_ID INT

, DIM3_ID INT

, DIM4_ID INT

, DIM5_ID INT

, NUMBER1 FLOAT

, NUMBER2 FLOAT

, NUMBER3 FLOAT

, NUMBER4 FLOAT

) engine=ARCHIVE

;

I did this in MyISAM as well

CREATE TABLE test_myisam

(

DIM1_ID INT

, DIM2_ID INT

, DIM3_ID INT

, DIM4_ID INT

, DIM5_ID INT

, NUMBER1 FLOAT

, NUMBER2 FLOAT

, NUMBER3 FLOAT

, NUMBER4 FLOAT

) engine=MyISAM

;

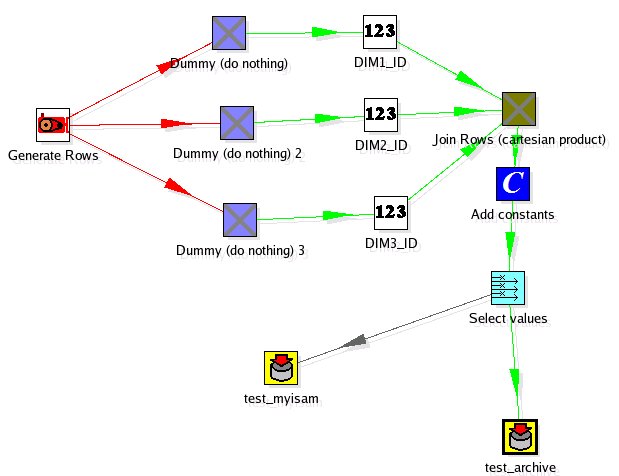

I used a simple Kettle transformation to populate both the these tables with 1 million rows of data. It wasn’t scientific but the INSERT performance of the Archive and MyISAM tables were very similar (within 10% of the throughput of rows).

So now we have a million rows, with a reasonable FACT record format. How much space do these 1 million rows require to store?

+--------------+---------+---------------+------------+

| table_name | engine | total_size_mb | table_rows |

+--------------+---------+---------------+------------+

| test_archive | ARCHIVE | 2.63 | 1000000 |

| test_myisam | MyISAM | 36.24 | 1000000 |

+--------------+---------+---------------+------------+

The Archive table uses an order of magnitude LESS space to store the same set of FACTS. What about query performance? I’ll have to do another blog on a more scientific approach but the anecdotal query performance on typical OLAP queries (select sum() from fact group by dim1_id) seemed related (less than a 15% difference).

Let’s be clear here, one MUST have a good aggregate table strategy so the fact records are RARELY accessed because the performance will not rock your world. However, this is the case with these volumes anyhow. Summaries and Aggregates have to be built for the end user to have a good experience on the frond end.

Archive engine is strange. INSERT/SELECT only. You can’t do ANYTHING with it, except drop and recreate it. For historical fact loads on “settled” DW data segmented on a daily basis this is usually not an issue. No transactions need, no DML needed. I’d also like to see how this compares to “packed” MyISAM tables at some point. I’m guessing I wouldn’t expect to see it beat the compression in terms of storage space, but there’s some nice things you can do with MyISAM tables (for instance, MERGE).

Back of the napkin calculation to handle BIG data volume:

2.63 MB / million * 200 per day = 526 MB / day

526 MB / day * 365 days = 187.5 GB

Anyone else have fun experiences to share with the Archive engine?

Side Note: I did all this testing on a VNC Server enabled EC2 instance I use for testing. Fire it up, VNC in, do my testing, shut it down. I LOVE EC2!

Why I don't have a .sig on email

One of my pet peeves is an email thread that grows 100 lines with every “Sounds good to me” reply. You know what I’m talking about.

10 screens of text, with about 1 screen of actual content/conversation.

All these logos and titles, fax numbers, clever logans and sayings, etc. AHHHH….

It’s a networked world, it doesn’t have to be on EVERY SINGLE EMAIL RESPONSE. If you want to get in touch with me, you can google me and immediately find my site, etc.

I’m Twitter’ed, LinkedIn, YahooMessenger, AIM, MSN, skype, etc. I’m easy to get a hold of, you don’t need to have 10 copies of ALL MY CONTACT INFO in an email.

Why "web 2.0" works

There’s an intersection of value at

a) products that are web service and plugin enabled

b) companies that provide interesting “net effect” services

For instance, tonight my hosting provider, Dreamhost, emailed and said that my feed was being hit so extensively that I was causing service interruptions on the other accounts on the machine.

First, to the other sites, and I have no idea who you are but I’m sorry!

Second, I was able to leverage a web 2.0 – ish service and plugin to instantly alleviate the pain (with a cached redirected version of my feed) and now I’m getting some cool extra stats on my RSS (who/how/what). All in < 30 minutes.

Because my blogging software works in the networked world, things happen easier and more naturally than me having to hack around a bunch of code special scripting/patching on my website. Plugins/Service/etc. It’s a grand new world.

PS – I guess I’m over that whole “bloggers block” thing. Spouting out useless crap on my blog again.

Meet me in San Francisco: Pentaho Training

I am going to be the instructor for the Operational Business Intelligence course in April. This particular course digs into the reporting tools, scheduling, processes, design, etc. Basically, everything you need to build a reporting-centric solution using Pentaho.

Operational Business Intelligence – San Francisco, CA

303 Twin Dolphins Drive

Suite 600

Redwood City, CA 94065

USA

Monday, April 23, 2007 – Thursday, April 26, 2007

I’m curious: if you signup for the course having learnt about it here, put in the notes that was the case. I’ll bring a special Pentaho shwag gift to anyone who signs up from this blog readership in the next two weeks!

PS – I know I know. Redwood City isn’t technically SFO but … whatever!

Honest Reflection: Am I done blogging?

Has anyone ever experienced “Bloggers Block?” You know, where you have plenty of things to write about, but are unable to select a topic and put some metaphorical pen to paper? I’ve been experiencing this since my return from extended holiday.

I arrived back and started work again at Pentaho. There’s been a bunch of developments at Pentaho (more open source features, key customer successes, etc), there’s been some interesting open source moves (licenses, alliances, etc), some interesting BI moves (vertica, hyperion acquisition, etc). Basically, I have a Blog Backlog of probably 25 or so juicy, page plusers. Things I would dig into, opine about, and hopefully help disseminate some useful information.

So… What’s the problem?

I just can’t. I’ve sat down and I look at my blog client and I just can’t seem to pick one, and write it. None of it seems worthy enough to be the first, or most interesting, or … Pick any reason, I’ve come up with it for why not to write on a subject.

Now that I’ve committed the cardinal sin of Apologetic Blogging (I aboslutely HATE reading blog posts entitled: Sorry I haven’t posted in a while) perhaps I should just turn it in. In fact, with so many people blogging these days it’s almost refreshing to hear “I don’t blog anymore.”

So I ask myself the question honestly: Am I done blogging? Is it worth the time? Is it worthwhile? Do I receive enough enjoyment from the writing to continue?

I don’t know. However, implicitly my next (possible) blog will answer that question, yes?

Back from Holiday

After a wonderfully refreshing extended holiday to South America, I’m back.

I sit today in front of my laptop, looking out the window at a swarthy, rainy Seattle day. The surprise? I’m kind of excited to get back to work, connect up with all my coworkers/partners/customers, and check out the new Pentaho releases while I was gone (Kettle 2.4.0, yippee).

Just sooooo much to blog about! Best get through that Inbox so I can do some real work tomorrow. 🙂

Going on a long walk

Someone once said

If you want to clear your mind go for a long walk. The longer the walk, the clearer the mind.

That’s good advice I say, and some that my wife and I are taking. Not that we need to clear our minds, but it’s our honeymoon of sorts we’ve been planning for almost two years.

We’re headed off for a two month trip to South America (Argentina and Chile). We won’t be checking emails, blogs, phone messages.

Signing off the Matrix for two months. Catch y’all in March!

JFree for WaterAid

David Gilbert, master behind the every popular JFreeChart, has initiated a campaign on behalf of JFree.org for Wateraid.

Bits matter, but people matter too. Consider donating on behalf of a very worthwhile open source project.

http://www.justgiving.com/jfree

Badgeware CEO to community: Buy a Commercial License

My jaw nearly hit the ground when I saw one of the badgeware company CEOs actually write, what most badgeware critics already know: they want you to buy a commercial license because you find the forced UI advertising unpallatable.

From Dave Rosenberg (Mulesource) blog on “Licensing in London“:

So, if you use Mule in your software product

and sell it commercially, then you are required to either make a

licensing deal with us or keep the �powered by Mule� logo visible. Just

as so many other things in OSS are confusing, it appears that this too

has created some consternation-primarily because people want to embed

Mule in their products and couldn’t quite make sense of how the

attribution would work.My answer was simple. You make a deal with us for a commercial license and then you do whatever you want.

WOW.

At least someone is finally admitting this is one of the intents of badgeware. Even if we disagree, it is important to say with genuine sincerity: thank you for being honest about why you use badgeware.

The bright side is at least these vendors are finally getting some pushback and having to explain their licenses. This is important… People need to know what they’re getting themselves into: forced advertising for vendors using a license that is, arguably, NOT an open source license. Badgeware (even **if** the OSI approves it) has implications for customers, developers, partners; in short, EVERYONE. People need to know that this is the kind of company/project/license they are dealing with.